I found this data set about college students that had a bunch of demographic info and also details about whether they completed their degree. I'm very interested in the conditions that make it more or less likely for a person to succeed in school, so I wanted to dig in and see how life outside of school possibly affects how a student does in school.

There's been a bunch of research showing how socioeconomic status and parental education levels affect student (and those two variables are ripe for issues of multicollinearity), so I didn't want to reinvent the wheel. Something that intrigued me about this data was a column about marital status. For obvious reasons, that's not something you see a lot in analysis of K-12 education. A student's family of origin often plays a massive part in their success, so I was very curious to see what effect a chosen family member might have.

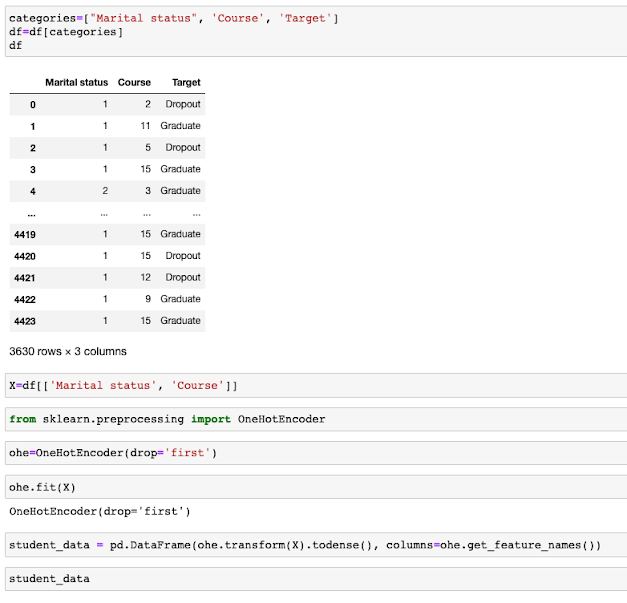

So I had a list of over 3500 students with their relationship and graduation status outlined.

Unfortunately there's no "Married" point on the x or y axis. I'd need to shift my data into something computer-interpretable. And I couldn't just change my "Married" entries to 1s and my "Unmarried" entries to 0s because this data set included 6 different possibilities for "Marital status." And yes, those are numerical values, but they are clearly categorical. Being married is not inherently twice as valuable as being single. So we can't treat those integers like we would regular numbers.

And yes, those are numerical values, but they are clearly categorical. Being married is not inherently twice as valuable as being single. So we can't treat those integers like we would regular numbers.

Enter a heroic class from the sklearn package: OneHotEncoder.

OneHotEncoder takes those pesky categoricals, whether strings or disguised in numeric form, and converts them into something you can use for inferential modeling. It makes multiple columns (in this case, 6 for marital status, since that's how many values we have) and enters a 1 or a 0. This would be wildly time consuming to do ourselves. The OneHotEncoder does it quickly and now the data is so much more usable.

I opted to drop the "Course" columns the OneHotEncoder made because I chose to just focus on marital status. My target was whether the student graduated or dropped out. Those were the only two options in the version of the data set I'd pulled, so I just needed to convert those to 1s and 0s, which I could do with pd.get_dummies (the pandas corollary to OneHotEncoder).

Now I've used two different packages to achieve the same goal, and my data is sufficiently processed to evaluate. I ran a linear regression and scored the result.

Oof. It looks like there is next to no relationship between your relationship and whether you graduate from college.

Of course, there are a million issues with the data analysis I just ran. The vast majority of my data points were single, so I didn't have much information on married students or those in other kinds of relationships. I could have transformed my data to make the marital status information more normal. The type of student who is married is probably different from a single student in lots of other ways. I could have investigated other factors. The data scientist tackling this problem is far from done.

But I got to test out my first question very quickly and easily, thanks to OneHotEncoder.

Comments

Post a Comment